A spotted dove family decided to move into the windowsill area attached to my room, so I decided to put them on the internet.

I’d always wanted to make a webcam livestream, but never got around to it. In the span of a few days, I went from zero to:

- Self-hosted livestream with motion

- Python program to automatically post to Mastodon

- ffmpeg streaming to YouTube and exposing an RTMP endpoint for other services to consume

This is how I did it.

0. Webcam

I didn’t want to spend a lot of money, as I wasn’t sure this was going to work, and the camera might end up outside. I knew that whatever I got needed to work with Linux, so I was hoping to find a Logitech model. Unfortunately, when I got to my local 3C store, I found their selection of Logitech webcams to be quite expensive (US$50+). The cheapest webcam on the shelf was this model, the E-books W16. I did some research in the store but couldn’t confirm Linux compatibility. I explained my requirements to a helpful sales associate, who said that if it didn’t work and I kept everything together, I could bring it back. Cool.

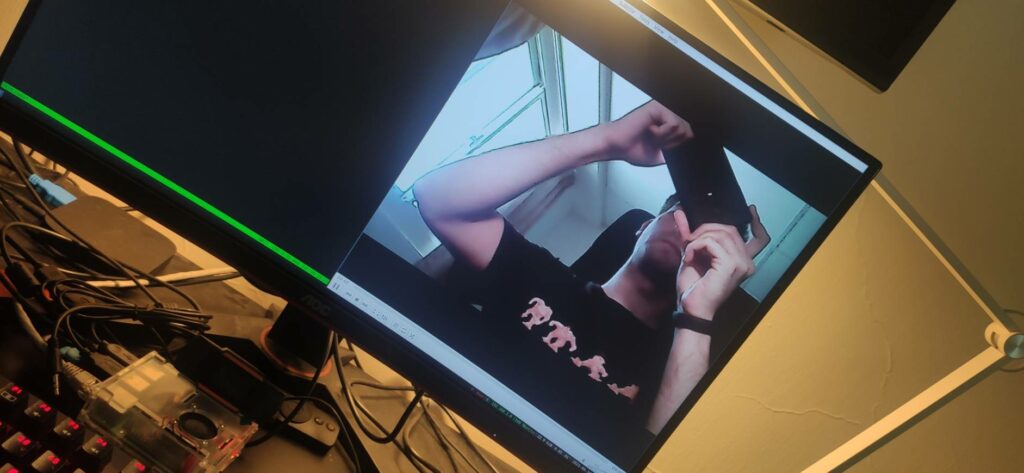

So I brought it home, connected it to my Raspberry Pi to test and:

Success! Native support in Linux. I was happy.

1. Mounting

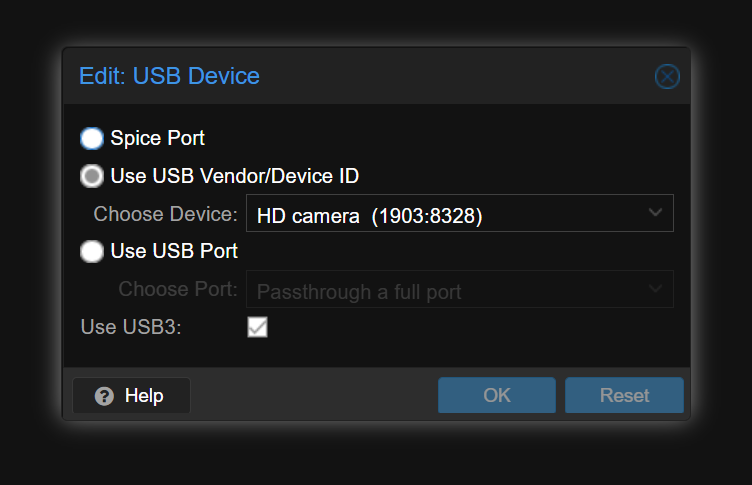

2. Proxmox

I wanted whatever server I ended up running to live in my Kubernetes cluster. This meant that I needed to attach the webcam to the VM running my Kubernetes node. Fortunately, this was very easy to do in Proxmox. Just add it like any other hardware:

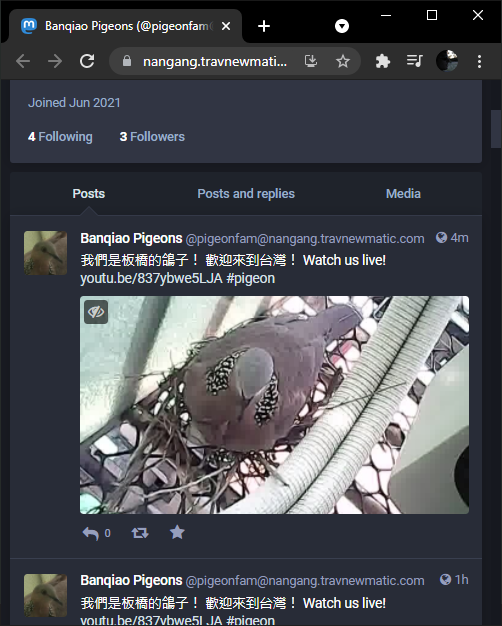

3. motion + Python Mastodon bot

I’m very new to streaming stuff online. Early in my research, I came across https://motion-project.github.io/. Motion makes it super simple to put webcam video on the internet. It hosts a little webpage and it works well. It has all kinds of other advanced features, including motion detection and time-lapse video creation. I hope to play with it more, but my first use was to create a simple stream, which I got running quite quickly inside of Kubernetes. By default, motion will automatically look for your webcam at /dev/video0.

The next thing I wanted to do was to create a bot to periodically post a picture to my Mastodon instance (inspired by Koopa). I configured motion to take a picture every hour and run a program to ‘toot’ the picture when it does. This was very easy to do. Actually, I rolled this up into a Docker image: https://hub.docker.com/r/travnewmatic/motion-mastodon-bot (I need to add more documentation, I know). It’s not visible in this Deployment manifest, but if motion needs access to /dev/video0, the pod needs to run with a privileged securityContext, and it has to run on the node with the USB camera attached (I did this with ‘nodeName’).

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: motion-mastodon-bot
      name: motion-mastodon-bot
      namespace: webcam
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: motion-mastodon-bot
      template:
        metadata:
          labels:
            app: motion-mastodon-bot
        spec:
          containers:
          - image: travnewmatic/motion-mastodon-bot:rtmp
            env:
            - name: instance
              value: "https://nangang.travnewmatic.com"
            - name: token
              value: "xxxxxxxxx"
            # The Chinese part of the message reads:
            # "We are the pigeons of Banqiao! Welcome to Taiwan!"
            - name: message
              value: "我們是板橋的鴿子! 歡迎來到台灣! Watch us live! https://youtu.be/837ybwe5LJA #pigeon"
            imagePullPolicy: Always
            name: motion-mastodon-bot
            volumeMounts:
            - mountPath: /var/lib/motion
              name: snapshots
            ports:
            - containerPort: 8080
              name: control
              protocol: TCP
            - containerPort: 8081
              name: stream
              protocol: TCP
          volumes:
          - name: snapshots
            emptyDir: {}
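Since the privileged and nodeName bits aren’t in the manifest above, here is a rough sketch of what they might look like, written as a patch built in Python. This is not my actual manifest: the helper name `device_access_patch` and the volume name are made up for illustration, and the node name and hostPath volume simply mirror the ffmpeg Deployment further down.

```python
import json


def device_access_patch(container_name, node_name, device="/dev/video0"):
    """Build a strategic-merge-style patch (as a plain dict) that pins the pod
    to one node, runs the container privileged, and mounts the host device."""
    return {
        "spec": {
            "template": {
                "spec": {
                    # Schedule the pod on the node that has the USB camera.
                    "nodeName": node_name,
                    "containers": [
                        {
                            "name": container_name,
                            "securityContext": {"privileged": True},
                            "volumeMounts": [
                                {"name": "webcam", "mountPath": device}
                            ],
                        }
                    ],
                    # Expose the host's device node to the pod.
                    "volumes": [
                        {"name": "webcam", "hostPath": {"path": device}}
                    ],
                }
            }
        }
    }


if __name__ == "__main__":
    patch = device_access_patch("motion-mastodon-bot", "k3s1-node1")
    print(json.dumps(patch, indent=2))
```

The printed JSON could be saved to a file and applied with something like `kubectl patch deployment motion-mastodon-bot -n webcam --patch-file patch.json`, assuming a reasonably recent kubectl.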

travnewmatic/motion-mastodon-bot takes a few environment variables:

- the URL of the Mastodon instance you want to post to

- the token of your account

- the message you want to include with the picture

- (optionally) the snapshot_interval, which is set to 3600 seconds by default
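To make the list above concrete, here is a minimal sketch of how those variables could be read and validated in Python. The variable names and the 3600 second default come from the list; `BotConfig` and `load_config` are hypothetical names, and the image itself may well handle `snapshot_interval` differently (for example, in its entrypoint rather than in Python).

```python
import os
from dataclasses import dataclass


@dataclass
class BotConfig:
    instance: str
    token: str
    message: str
    snapshot_interval: int = 3600  # seconds between snapshots


def load_config(env=None):
    """Read the bot's settings from environment variables, failing early
    with a readable error if a required one is missing."""
    env = os.environ if env is None else env
    required = ("instance", "token", "message")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return BotConfig(
        instance=env["instance"],
        token=env["token"],
        message=env["message"],
        snapshot_interval=int(env.get("snapshot_interval", 3600)),
    )
```

Passing a plain dict instead of relying on `os.environ` makes the function easy to try out without touching the real environment.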

The tooting part was super simple:

    #!/usr/bin/python3
    from mastodon import Mastodon
    import os

    # Read the bot settings from the container's environment
    token = os.getenv('token')
    instance = os.getenv('instance')
    message = os.getenv('message')

    # Set up Mastodon
    mastodon = Mastodon(
        access_token = token,
        api_base_url = instance
    )

    # Upload the latest snapshot, then post it along with the message
    media = mastodon.media_post("/var/lib/motion/lastsnap.jpg", mime_type="image/jpeg")
    mastodon.status_post(message, media_ids=media)

One nice thing motion does: in the snapshot directory, it maintains a symlink ‘lastsnap.jpg’ pointing to the most recent snapshot. Pretty neat 🙂
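If you want to know which file that symlink currently points at, or how old it is, before posting, something like the following should work. This is a sketch of my own rather than part of the bot; `latest_snapshot` is a made-up helper name, and the directory default just matches the mountPath used above.

```python
import os
import time


def latest_snapshot(snapshot_dir="/var/lib/motion", link_name="lastsnap.jpg"):
    """Return (real_path, age_in_seconds) for the file the symlink points to."""
    link_path = os.path.join(snapshot_dir, link_name)
    # realpath follows the symlink to the actual snapshot file.
    real_path = os.path.realpath(link_path)
    if not os.path.isfile(real_path):
        raise FileNotFoundError(f"{link_path} does not point to a snapshot yet")
    age = time.time() - os.path.getmtime(real_path)
    return real_path, age
```

A bot could skip posting when the age is much larger than the snapshot interval, which would suggest motion has stopped taking pictures.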

4. ffmpeg

Pretty quickly, I ran into a problem. I could only have one thing using /dev/video0 at a time. This was not ideal. So after A LOT of experimentation, I came up with this:

    ffmpeg -i /dev/video0 \
        -f flv \
        -rtmp_live live \
        -listen 2 \
        rtmp://:1935

Looks pretty simple now, but:

- I’m an idiot

- ffmpeg is hard

This little bit was enough to expose /dev/video0 for other services to consume. So, I made a slightly modified version of my motion container to use this RTMP stream instead of the /dev/video0 device:

    # Full Network Camera URL. Valid Services: http:// ftp:// mjpg:// rtsp:// mjpeg:// file:// rtmp://
    netcam_url rtmp://ffmpeg-rtmp.webcam.svc.cluster.local

That URL looks the way it does because that’s how hostnames (can) look inside Kubernetes.
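The pattern is `<service>.<namespace>.svc.<cluster domain>`. As a small illustration, assuming a Service named `ffmpeg-rtmp` exists in the `webcam` namespace (that manifest isn’t shown in this post) and the cluster uses the default `cluster.local` domain, the hostname and the motion config line could be built like this. Both function names are hypothetical.

```python
def service_hostname(service, namespace, cluster_domain="cluster.local"):
    """Build the in-cluster DNS name <service>.<namespace>.svc.<cluster domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def netcam_url_line(service, namespace):
    """Build the motion config line that points netcam_url at the RTMP Service."""
    return f"netcam_url rtmp://{service_hostname(service, namespace)}"


if __name__ == "__main__":
    print(netcam_url_line("ffmpeg-rtmp", "webcam"))
```

No port appears in the URL because 1935 is the default RTMP port, which is also the port ffmpeg listens on here.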

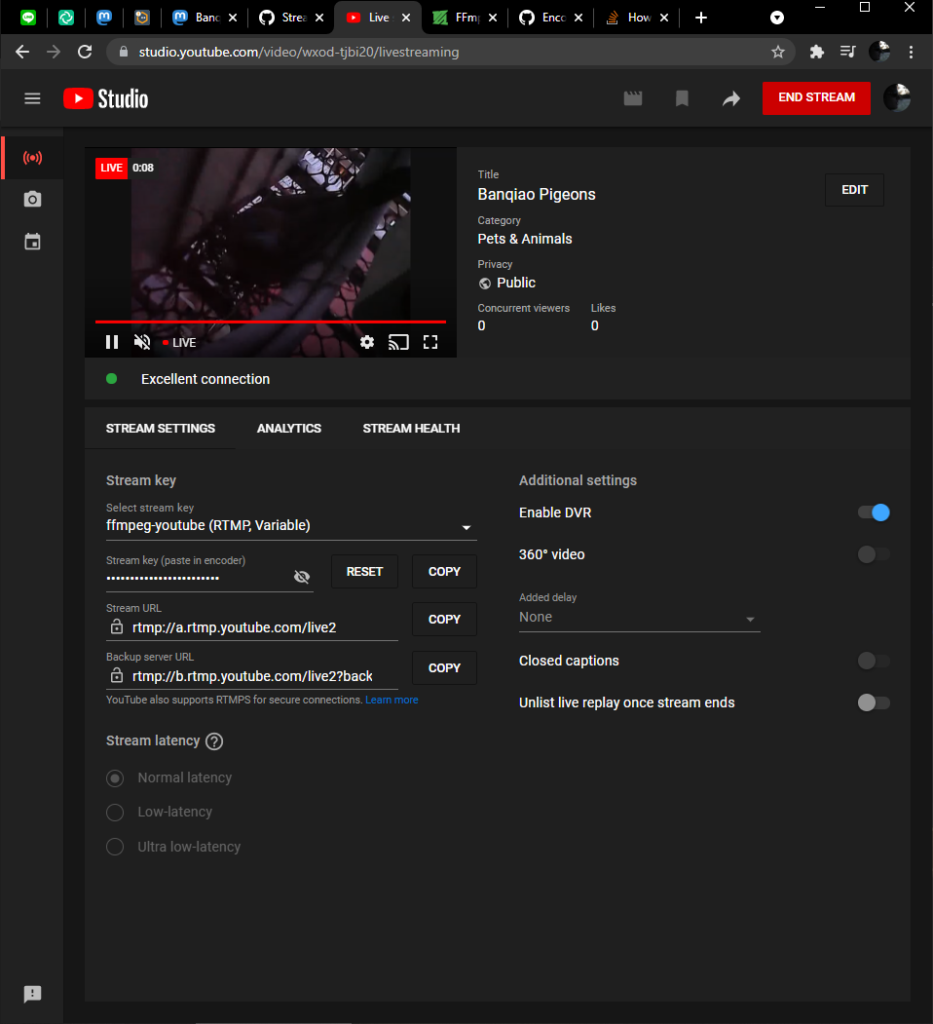

5. YouTube

My functional, albeit choppy, pigeoncam stream was working, and posting to Mastodon was working. But I was a little annoyed that the webcam could do slightly better video than what motion was showing. My first approach was to run a second ffmpeg that pulled from the first one and sent the stream to YouTube, but I wasn’t having much luck with that. Fortunately, I discovered that ffmpeg can have multiple outputs! In my ffmpeg/YouTube research, I came across some discussion about YouTube requiring an audio component in the stream, even if there’s no sound, so I included that in my ffmpeg command:

    ffmpeg -i /dev/video0 \
        -f flv \
        -rtmp_live live \
        -listen 2 \
        rtmp://:1935 \
        -f lavfi \
        -i anullsrc \
        -f flv \
        -rtmp_live live \
        -c:v libx264 \
        rtmp://a.rtmp.youtube.com/live2/supersecretyoutubekey
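Because that command ends up as a list of arguments in a Deployment, it can help to see it as a list. Here is a sketch that builds the same arguments in the same order from Python; `build_ffmpeg_args` is a name I made up, and the stream key in the example is a placeholder.

```python
import shlex


def build_ffmpeg_args(device, listen_url, youtube_url):
    """Return the ffmpeg argument list in the same order as the command above:
    webcam input, local RTMP output, silent audio input, YouTube output."""
    return [
        "ffmpeg",
        "-i", device,
        # Output 1: local RTMP listener for in-cluster consumers such as motion.
        "-f", "flv", "-rtmp_live", "live", "-listen", "2", listen_url,
        # Silent audio generated by the lavfi virtual input.
        "-f", "lavfi", "-i", "anullsrc",
        # Output 2: H.264 video pushed to YouTube's RTMP ingest.
        "-f", "flv", "-rtmp_live", "live", "-c:v", "libx264", youtube_url,
    ]


if __name__ == "__main__":
    args = build_ffmpeg_args(
        "/dev/video0",
        "rtmp://:1935",
        "rtmp://a.rtmp.youtube.com/live2/YOUR-STREAM-KEY",  # placeholder key
    )
    # Print a shell-quoted version for inspection instead of running it.
    print(shlex.join(args))
```

To actually run it, the list could be handed to `subprocess.run(args, check=True)`; each element also maps one-to-one onto an entry under `command:` in a container spec.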

ffmpeg deployment manifest:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: ffmpeg-rtmp
      name: ffmpeg-rtmp
      namespace: webcam
    spec:
      progressDeadlineSeconds: 600
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: ffmpeg-rtmp
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
        type: RollingUpdate
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: ffmpeg-rtmp
        spec:
          containers:
          - command:
            - ffmpeg
            - -i
            - /dev/video0
            - -f
            - flv
            - -rtmp_live
            - live
            - -listen
            - "2"
            - rtmp://:1935
            - -f
            - lavfi
            - -i
            - anullsrc
            - -f
            - flv
            - -rtmp_live
            - live
            - -c:v
            - libx264
            - rtmp://a.rtmp.youtube.com/live2/supersecretyoutubetoken
            image: jrottenberg/ffmpeg
            imagePullPolicy: Always
            name: ffmpeg
            ports:
            - containerPort: 1935
              name: rtmp
              protocol: TCP
            resources: {}
            securityContext:
              privileged: true
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
            volumeMounts:
            - mountPath: /dev/video0
              name: webcam
          dnsPolicy: ClusterFirst
          nodeName: k3s1-node1
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
          volumes:
          - hostPath:
              path: /dev/video0
              type: ""
            name: webcam

Conclusion, References

So that’s where things are right now. I wanted a way to document and share the progress of my new avian neighbors with the world, and I’m quite proud of what I’ve been able to accomplish. Share the stream with your friends!

- motion-mastodon-bot GitHub repo

- How I got started with motion: https://www.instructables.com/How-to-Make-Raspberry-Pi-Webcam-Server-and-Stream-/

- The magic command that got me going with ffmpeg: https://installfights.blogspot.com/2019/01/webcam-streaming-throught-vlc-with-yuy2.html

- Make a mastodon bot with Python: https://shkspr.mobi/blog/2018/08/easy-guide-to-building-mastodon-bots/

- Configure motion: https://motion-project.github.io/motion_config.html

- Consume host device with pod: https://stackoverflow.com/a/42716234

- ffmpeg + youtube + audio: https://superuser.com/a/1537250
